Tuesday 18 February 2020 – Living with Machines (Turing Institute, British Library) – Maps and Machines: Using Computer Vision to Analyse the Geography of Industrialisation (1780-1920)

This seminar is 5:30 pm – 6:30 pm, 18 February 2020, in Foster Court Room 235, UCL. Foster Court is off Malet Place, part of UCL’s Bloomsbury Campus, London, WC1E 6BS (not at our usual Senate House venue). It will also be livestreamed on YouTube.

Session chair: Justin Colson / Richard Deswarte

Abstract

Living with Machines is a research project that seeks to create new histories of the lived experience of industrialisation in nineteenth-century Great Britain. Because the vast archives of this period have been challenging to interrogate at scale, we use computational methods to explore newspapers, maps, census records, novels, and more. In this presentation, we will introduce our work using computer vision to automatically identify information in Ordnance Survey maps.

One of the origin stories of spatial history involves historians turning tabular data into maps that could be analysed with GIS. Newer research has turned from tabular to text data as a source of information that can be mapped. Very recently, some scholars have approached maps as data by manually creating datasets that organise cartographic information in machine-readable forms, while even fewer are exploring methods for computational analysis of historic maps. We aim to push this spatial humanities work further in two ways: 1) building up the opportunities for intersecting tabular, text, and visual historical data and 2) automatically creating data from maps.

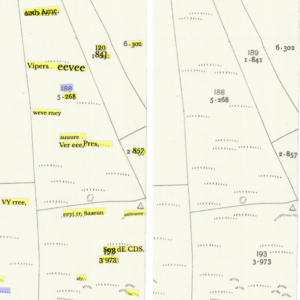

The early OS maps are a unique, serial, partially digitised corpus. Our sample includes images and metadata from nineteenth- and early twentieth-century OS maps shared by the National Library of Scotland (8,765 sheets out of approximately 203,000 maps from seven different editions and scales printed between ca. 1840 and 1900), as well as the recently released GB1900 dataset. We kicked off our research with a series of learning days designed around historical research questions (How does the presence of machines impact lives differently in different places during the Industrial Revolution?), issues of source bias (How do cartographic sources represent rapid industrialisation?), and methodological challenges (How well do existing computer vision methods work on nineteenth-century maps? How can we establish ‘ground truth data’ in a way that is sensitive to ambiguities?).

Analysing historical maps in this manner allows us to depart from older methodologies dependent on spatial analysis of vector data. Reimagining data extraction from maps also means adapting investigations to accommodate only partial recall of the information documented on the maps. For example, using maps to identify where machines and machine infrastructure are located in relation to other kinds of features (homes, schools, forests, hospitals, etc.) is one task that enriches what we can know about the shape of nineteenth-century communities, but it will never perfectly reproduce the map content. Working at scale, we will nevertheless be able to use information about the presence or absence of certain features as a link to the lived experience that we read about in newspapers. Rather than organise project resources around vectorisation of specific map features, we are exploring how far state-of-the-art computer vision techniques based on deep learning can be ‘translated’ to help answer questions of historical interest. We aim to take a holistic view of the map contents and see how these can be cross-referenced with spatial information we identify in text or tabular data.

Speaker biographies

Daniel C.S. Wilson is a historian of modern Britain, with a focus on science and technology. He has degrees in History and Philosophy, and has held research fellowships in Cambridge and Paris. Prior to joining The Alan Turing Institute, Daniel taught in the Department of History and Philosophy of Science at Cambridge, where he also worked on the ‘Technology & Democracy’ project at CRASSH: an inquiry into the politics of the digital.

Daniel van Strien is a Digital Curator for Living with Machines. He is particularly interested in the use of Natural Language Processing methods on historic collections, the use of Deep Learning for researching and managing digital collections, and Open Science approaches to carrying out research and dissemination. Before joining the British Library, Daniel gained extensive experience working on Research Data Management and Open Science, contributed to the development of Library Carpentry, and worked in specialist medical and legal library services.

Kaspar Beelen is a digital historian who explores the application of machine learning to humanities research. After obtaining his PhD in History (2014) at the University of Antwerp, he worked as a postdoctoral fellow at the University of Toronto on the Digging into Linked Parliamentary Data (Dilipad) project. In 2016, Kaspar moved to the University of Amsterdam, where he first worked as a postdoc for the “Information and Language Processing Systems” group and later became assistant professor in Digital Humanities (Media Studies). Since February 2019, he has worked at the Turing Institute as a research associate for the Living with Machines project.

Katie McDonough is a historian of eighteenth-century France working at the intersection of political culture and the history of science and technology. She completed her PhD in History at Stanford in 2013. She has taught at Bates College and was a postdoctoral researcher in digital humanities at Western Sydney University (Australia). Before joining the Turing Institute, Katie was the Academic Technology Specialist in the Department of History/Center for Interdisciplinary Digital Research at Stanford University.